Traditionally, machine learning meant building a new model for each new task, trained on whatever labeled data was available for that task. This is because traditional machine learning (ML) algorithms assume that training and test data come from the same feature space and the same distribution. If the data distribution changes, or a trained model is applied to a new dataset, the model has to be retrained from scratch, even when the new task is similar to the old one. For example, a sentiment classifier trained on movie reviews would have to be rebuilt to classify song reviews.

Transfer learning, by contrast, uses pre-trained models or networks as a starting point. The knowledge the model gained on the original source task (such as classifying movie reviews) is then applied to a new, similar task or dataset, such as classifying song reviews.

This makes transfer learning especially popular in deep learning, because it lets you train neural networks with comparatively little data. It is also valuable in data science more broadly, since most real-world problems lack the millions of labeled data points needed to train such complex models from scratch.

In this article, we discuss what transfer learning is, how it works, when to use it, its advantages and disadvantages, its applications, and some popular examples.

What is Transfer Learning?

Transfer learning is a machine learning method in which knowledge learned from one task or dataset is transferred to improve model performance on another, similar task or dataset. It uses what was already learned in one setting to improve generalization in another.

In simple terms, knowledge gained on an earlier task is reused to help a model generalize on a new one. For example, if you want to train a classifier to predict whether an image shows food, you can start from a classifier that was already trained to recognize fruit in images.

How Does Transfer Learning Work?

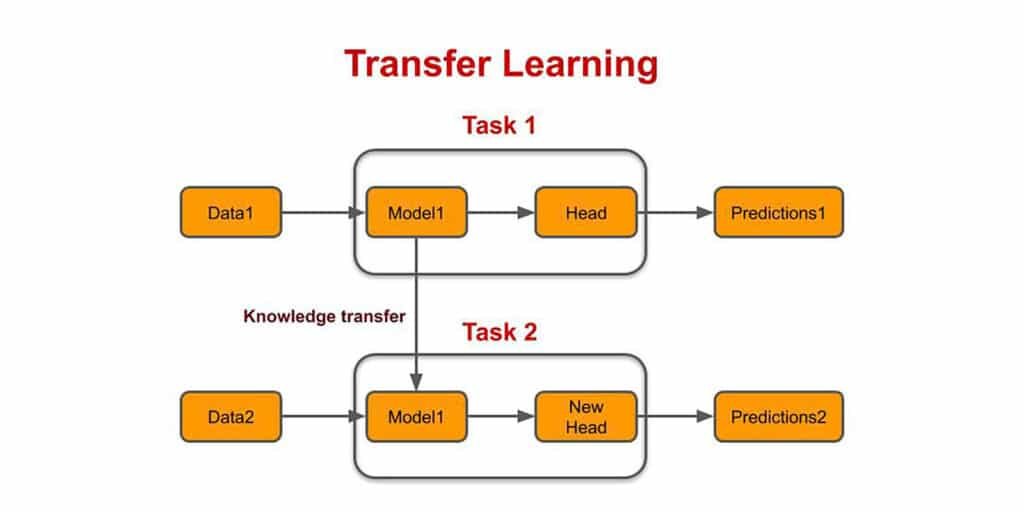

Transfer learning follows a structured process for reusing a trained model’s knowledge in a new model. It typically involves the following steps:

- Pre-Trained Model: Start with a model that has already been trained on a large dataset for a particular task. This pre-trained model has already learned general features and patterns that are useful for related tasks.

- Base Model: The pre-trained model serves as the base model. Its layers have learned hierarchical representations of the data, from low-level features (such as edges in images) to more complex ones.

- Transfer Layers: Identify the layers in the base model that hold generic information applicable to both the old and the new task. These are usually the earlier layers, closer to the input, which capture broad, reusable features; the final layers tend to be specific to the original task.

- Fine-Tuning: Train the selected layers further on the new task’s data. This step retains the knowledge from the original task while adjusting parameters to the specific needs of the new task, improving accuracy and adaptability.
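
The steps above can be sketched in plain Python. This is a minimal, illustrative sketch of the simplest variant, often called feature extraction: the transferred base layer stays frozen and only a new output layer is trained on the new task. The base weights are hypothetical stand-ins for weights learned on a source task (in practice they would come from a real pre-trained model), and `BaseModel`, `Head`, and `fine_tune` are illustrative names, not a library API.

```python
import math

def relu(v):
    return [max(0.0, x) for x in v]

def matvec(W, x):
    # Multiply matrix W (a list of rows) by vector x.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

class BaseModel:
    """Frozen feature extractor standing in for a pre-trained network."""
    def __init__(self, weights):
        # Hypothetical weights, assumed to come from training on a source task.
        self.weights = weights

    def features(self, x):
        return relu(matvec(self.weights, x))

class Head:
    """New task-specific output layer (logistic regression), trained from zero."""
    def __init__(self, n_features):
        self.w = [0.0] * n_features
        self.b = 0.0

    def prob(self, f):
        z = sum(wi * fi for wi, fi in zip(self.w, f)) + self.b
        return 1.0 / (1.0 + math.exp(-z))

def log_loss(base, head, data):
    total = 0.0
    for x, y in data:
        p = min(max(head.prob(base.features(x)), 1e-12), 1 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)

def fine_tune(base, head, data, lr=0.1, epochs=200):
    """Stochastic gradient descent on the head only; base.weights are never updated."""
    for _ in range(epochs):
        for x, y in data:
            f = base.features(x)       # forward pass through the frozen base
            err = head.prob(f) - y     # d(log-loss)/dz for a sigmoid output
            head.w = [wi - lr * err * fi for wi, fi in zip(head.w, f)]
            head.b -= lr * err

def accuracy(base, head, data):
    correct = sum((head.prob(base.features(x)) >= 0.5) == bool(y) for x, y in data)
    return correct / len(data)

# Hypothetical "pre-trained" base and a tiny target dataset (label 1 when x[0] > x[1]).
base = BaseModel([[1.0, -1.0], [-1.0, 1.0], [1.0, 1.0]])
target_data = [([2.0, 1.0], 1), ([1.0, 3.0], 0), ([3.0, 0.5], 1), ([0.5, 2.0], 0)]
head = Head(n_features=3)

loss_before = log_loss(base, head, target_data)
fine_tune(base, head, target_data)
loss_after = log_loss(base, head, target_data)
print(f"loss {loss_before:.3f} -> {loss_after:.3f}, "
      f"accuracy {accuracy(base, head, target_data):.2f}")
```

Only the small head is trained here, which is why this variant needs little data. Fine-tuning some of the base layers as well would mean also updating `base.weights`, usually with a small learning rate.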

When Can You Use Transfer Learning?

In machine learning, it is hard to formulate rules that apply universally, but transfer learning is generally a good fit in the following cases:

- Not Enough Training Data: You don’t have enough labeled data to train the network from scratch.

- Existing Network: A network pre-trained on a large amount of data for a similar task is already available.

- Same Input: The old and the new task take the same kind of input (for example, both take images).

If the original model was trained with an open-source library such as TensorFlow, you can easily restore it and reuse some of its layers for your task. However, transfer learning only works when the features learned on the old task are general enough to be useful for the new, related task.
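
Restoring and reusing layers can be pictured as copying weights by layer name. The sketch below is an illustrative assumption, not TensorFlow’s API: it treats each model as a plain dictionary mapping hypothetical layer names to flat weight lists, copies the layers you choose to keep, and leaves the new task’s output layer at its fresh initialization. In Keras the equivalent step would typically use `layer.get_weights()` and `layer.set_weights()`, and in PyTorch `load_state_dict(..., strict=False)`.

```python
import copy

def transfer_weights(source, target, keep):
    """Return a copy of `target` whose layers named in `keep` hold the
    pre-trained weights from `source`; all other layers are left unchanged."""
    result = copy.deepcopy(target)
    for name in keep:
        if name not in source or name not in result:
            raise KeyError(f"layer {name!r} is missing from source or target")
        if len(source[name]) != len(result[name]):
            # Reused layers must have matching shapes (here: matching lengths).
            raise ValueError(f"shape mismatch for layer {name!r}")
        result[name] = list(source[name])
    return result

# Hypothetical checkpoint from the source task (3-class output layer).
pretrained = {"conv1": [0.4, -0.1], "conv2": [0.2, 0.7], "head": [1.5, -2.0, 0.3]}
# New model for the target task (2-class output layer, freshly initialized).
new_model = {"conv1": [0.0, 0.0], "conv2": [0.0, 0.0], "head": [0.0, 0.0]}

restored = transfer_weights(pretrained, new_model, keep={"conv1", "conv2"})
print(restored)
# {'conv1': [0.4, -0.1], 'conv2': [0.2, 0.7], 'head': [0.0, 0.0]}
```

The output layer is not copied because its shape depends on the number of classes, which usually differs between the old and the new task.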

The model’s input must also have the same size as the inputs it was originally trained on. If it doesn’t, add a pre-processing step that resizes the input to the required size.
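
A resizing step might look like the following minimal sketch, which assumes a grayscale image stored as a list of rows and uses nearest-neighbour sampling for simplicity; `resize_nearest` is a hypothetical helper. Real pipelines usually call a library routine instead (for example `tf.image.resize` in TensorFlow or `Image.resize` in Pillow), which also offers smoother interpolation methods.

```python
def resize_nearest(image, out_h, out_w):
    """Resize a 2-D grayscale image (list of rows) to out_h x out_w
    using nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        # Map each output pixel back to a source pixel by integer scaling.
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]

# Hypothetical example: the pre-trained model expects 4x4 inputs, ours are 2x2.
small = [[1, 2],
         [3, 4]]
for row in resize_nearest(small, 4, 4):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```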

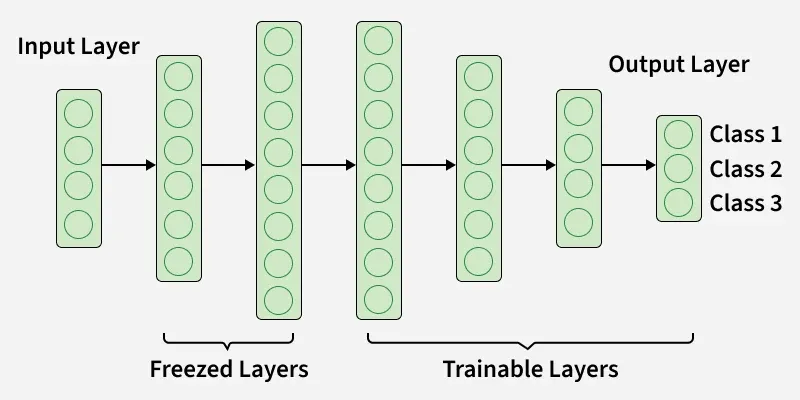

Frozen vs. Trainable Layers in Transfer Learning

To adapt a pre-trained model effectively, transfer learning divides its layers into two groups:

- Frozen Layers: These are layers from the pre-trained model that remain fixed (their weights are not updated) during fine-tuning. They preserve the general features learned on the original task and extract general patterns from the input data.

- Trainable Layers: As the name suggests, these layers are updated during fine-tuning to learn features specific to the new dataset. This allows the model to meet the particular requirements of the new task.
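
The difference between frozen and trainable layers comes down to which weights a training step is allowed to change. In the minimal sketch below, `Layer`, `freeze_all_but_last`, and `sgd_step` are hypothetical names and the gradients are made-up numbers; real frameworks expose the same idea through a per-layer flag, such as `layer.trainable = False` in Keras or `param.requires_grad = False` in PyTorch.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    name: str
    weights: list
    trainable: bool = True

def freeze_all_but_last(layers, n_trainable):
    """Freeze every layer except the last `n_trainable` ones."""
    for i, layer in enumerate(layers):
        layer.trainable = i >= len(layers) - n_trainable

def sgd_step(layers, grads, lr):
    """Apply one gradient-descent update, skipping frozen layers."""
    for layer in layers:
        if not layer.trainable:
            continue  # frozen: keep the pre-trained weights unchanged
        g = grads[layer.name]
        layer.weights = [w - lr * gi for w, gi in zip(layer.weights, g)]

# Two pre-trained layers plus a new output layer for the target task.
model = [Layer("conv1", [0.5, -0.2]), Layer("conv2", [0.1, 0.3]), Layer("head", [0.0, 0.0])]
freeze_all_but_last(model, n_trainable=1)

grads = {"conv1": [1.0, 1.0], "conv2": [1.0, 1.0], "head": [1.0, -1.0]}  # made-up gradients
sgd_step(model, grads, lr=0.1)
for layer in model:
    print(layer.name, layer.trainable, layer.weights)
# conv1 False [0.5, -0.2]
# conv2 False [0.1, 0.3]
# head True [-0.1, 0.1]
```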

Deciding Which Layers to Freeze or Train

How many layers to freeze or fine-tune depends mainly on the size of the target dataset and how similar it is to the original dataset.

- Small & Similar Dataset: If the dataset is small and resembles the original dataset, freeze most of the layers and fine-tune only the last one or two to prevent overfitting.

- Small & Different Dataset: If the dataset is small and differs from the original, fine-tune the layers nearer to the input. This helps the model learn the features that the new task specifically requires.

- Large & Similar Dataset: With a large dataset that is similar to the original, you can unfreeze more layers, letting the model adapt while still preserving the features already learned by the base model.

- Large & Different Dataset: Fine-tune the whole model. This lets it adapt fully to the new task while still starting from the knowledge in the pre-trained weights.
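
The four rules of thumb above can be condensed into a small lookup. This is a sketch under stated assumptions: layers are indexed from the input (index 0) to the new output layer (last index), the output layer is always trained, and the cut-offs (two layers, or the top half of the network) are illustrative choices rather than fixed rules. `freeze_plan` is a hypothetical helper.

```python
def freeze_plan(n_layers, large, similar):
    """Return one flag per layer (index 0 = nearest the input): True = trainable.

    Cut-offs are illustrative assumptions; the last layer is treated as the
    new task-specific output layer and is always trained."""
    if large and not similar:
        return [True] * n_layers                      # fine-tune the whole model
    if large and similar:
        cut = n_layers // 2                           # unfreeze the top half
        return [i >= cut for i in range(n_layers)]
    if similar:
        # Small & similar: train only the last two layers.
        return [i >= n_layers - 2 for i in range(n_layers)]
    # Small & different: layers nearest the input, plus the output layer.
    return [i < 2 or i == n_layers - 1 for i in range(n_layers)]

for large in (False, True):
    for similar in (True, False):
        flags = freeze_plan(6, large, similar)
        print(f"large={large}, similar={similar}:",
              "".join("T" if f else "F" for f in flags))
# large=False, similar=True: FFFFTT
# large=False, similar=False: TTFFFT
# large=True, similar=True: FFFTTT
# large=True, similar=False: TTTTTT
```

In practice the exact number of layers to unfreeze is usually chosen by trying a few settings and comparing validation performance.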

Advantages & Disadvantages of Transfer Learning

Advantages of transfer learning include:

- It speeds up training. Because the pre-trained model already knows the relevant patterns and features in the data, it can learn the second task quickly and effectively.

- When data for the second task is limited, transfer learning helps prevent overfitting, because the model has already learned general features that are useful for the new task.

- Because the model starts with knowledge from the previous task, transfer learning can improve performance on the second task.

Disadvantages of transfer learning include:

- If the two tasks are very different, or their data distributions differ significantly, the pre-trained model may be unsuitable for the new task.

- If the model is fine-tuned too heavily on the second task, it can overfit: it may learn features specific to the training examples that do not generalize to new data.

- Large pre-trained models and the fine-tuning process can be computationally expensive and may require specialized hardware such as GPUs.

Transfer Learning Applications

Thanks to its adaptability and cost-effectiveness, transfer learning is widely used in real-world applications.

Natural Language Processing (NLP)

NLP models such as ELMo and BERT are pre-trained on large, diverse text corpora and then adapted to specific tasks such as question answering, machine translation, and sentiment analysis.

Computer Vision

In image recognition, models are pre-trained on large image datasets such as ImageNet and then adapted to tasks like facial recognition, medical imaging, and object detection.

Finance

In finance, transfer learning is used for fraud detection, credit scoring, and risk assessment, reusing patterns learned from related financial datasets.

Healthcare

In healthcare, transfer learning is used to build medical diagnostic tools that apply the knowledge of general image recognition models to MRIs, X-rays, and other medical images.

Popular Examples of Transfer Learning

One of the most popular pre-trained models is Inception-v3, trained for the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). The challenge required participants to classify images into 1,000 classes, such as ‘dishwasher’ and ‘Dalmatian’.

Microsoft offers pre-trained models for R and Python development through the MicrosoftML R package and the microsoftML Python package.

Other popular pre-trained models used for transfer learning include AlexNet and ResNet.

Summing Up

Transfer learning is a machine learning technique in which a previously trained model is reused, or fine-tuned, for a similar task. Its benefits include reduced data requirements, faster training, lower cost, and better performance.

Today, it is a foundational method for efficiently solving a wide range of machine learning problems, especially in deep learning and data-constrained settings. It helps bring machine learning advances to real-world problems, particularly in domains where data, time, and resources are limited.
