Just as technology has evolved, so has the meaning of common sense over the years. In ancient Greece, Aristotle described common sense as the way a person observes the world around them. Today, common sense is the acquired judgment that helps us get around in the world. For example, we know not to walk into a wall, and not to run on a wet floor.

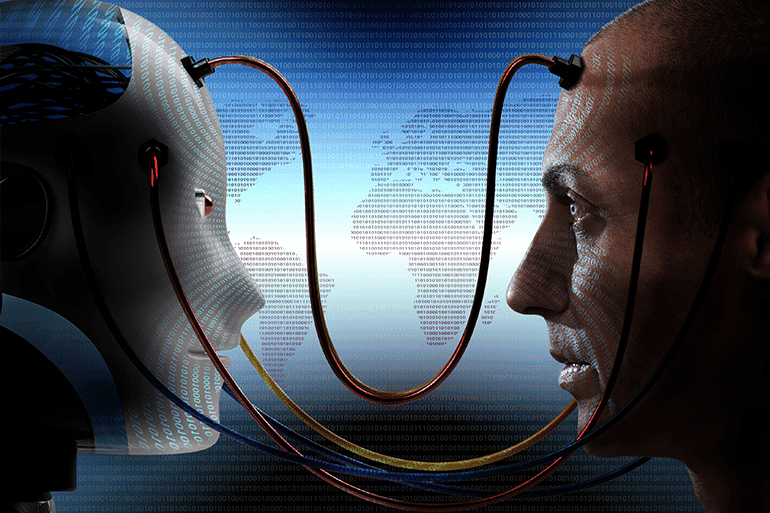

During the Industrial Revolution, the steam engine was a foreign concept, and people were skeptical about it. Once they saw and understood its benefits, the invention was termed ‘revolutionary’ and was widely accepted. Similarly, people are still unsure about the concept and usage of AI and its long-term consequences. But the real question is, can AI ever have common sense? To answer this, we first need to understand what common sense is and whether common sense reasoning in AI is required.

Common Sense

As I have just mentioned, there is certain acquired knowledge that we do not need formal training for, like swatting a mosquito but not hurting a butterfly. It is our understanding, as humans, of the world around us and how it works. This knowledge comes from observing, experiencing, and interacting with things every day.

Without common sense, the evolution of humankind might not have been possible, and we might never have invented so many revolutionary concepts, objects, and technologies. Whenever humans faced a problem, they used common sense to discover or invent a solution for it. For example: fire burns our hands, so we need something to keep it away from our bodies.

Since 2020, our biggest technological advancement has been artificial intelligence (AI). In this blog, we will be discussing how common sense in AI will be another anchor point for humans.

Why Does AI Lack Common Sense?

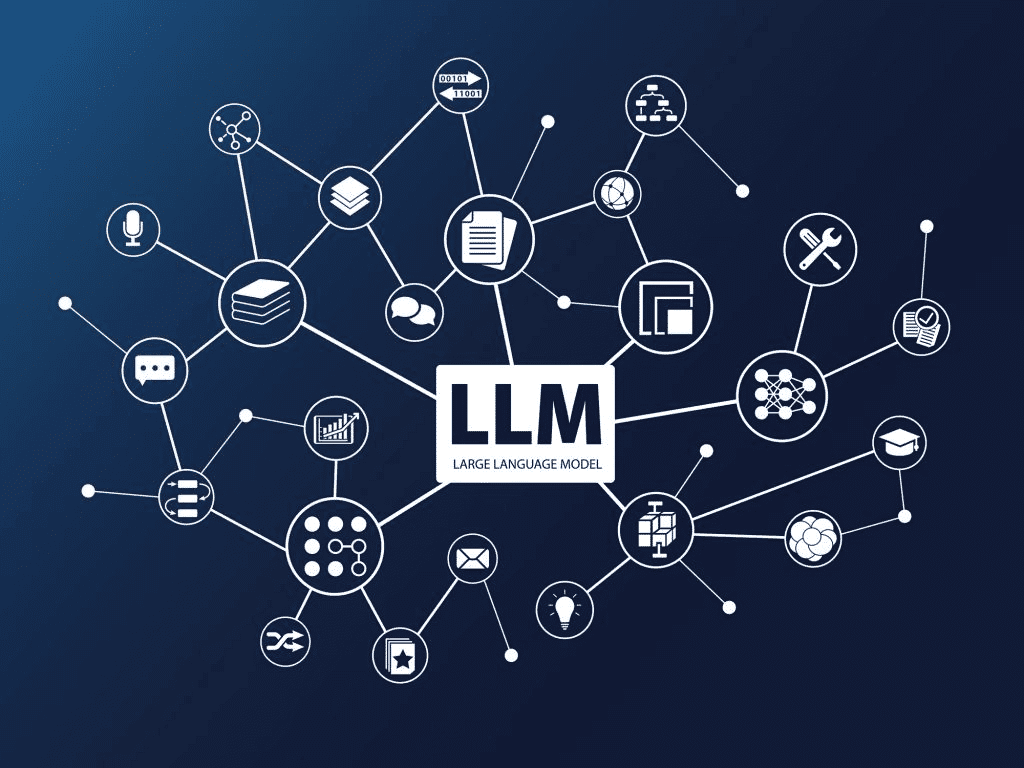

Most of today’s popular AI tools are built on Large Language Models (LLMs). Technically speaking, these LLMs are trained mainly on the ‘textual’ part of our world, not on direct experience of it.

The AI tools most people use are designed to predict the most probable next word in response to a user’s question, based on what they have learnt from their training data. When you ask a question and get an intelligent-sounding answer, it’s not because the model understands your question. It is because it has seen a lot of similar questions during training and is mimicking the ‘perfect’ answer to yours.
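
To make the idea of "predicting the most probable word" concrete, here is a minimal sketch in Python. It is only a toy: it counts which word follows which in a tiny, made-up corpus, whereas real LLMs use neural networks trained on vastly more text. The corpus and the function name `predict_next` are assumptions made for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data"; real LLMs train on vastly more text.
corpus = "the floor is wet . the floor is slippery . the floor is wet".split()

# Count how often each word follows each other word.
following = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    following[current_word][next_word] += 1


def predict_next(word):
    """Return the word seen most often after `word`, or None if unseen."""
    candidates = following.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


print(predict_next("is"))      # wet
print(predict_next("coffee"))  # None
```

The toy model answers "wet" after "is" only because that pairing appeared most often in its data, not because it knows what a wet floor is. It has nothing to say about "coffee" because it never saw the word.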

How Does AI Answer Our Questions?

To answer a question, an LLM first breaks the text into small units called tokens. Long words are broken into sub-parts, and each of these sub-parts is considered a token. Short words, like are, is, the, and we, are usually 1 token each, whereas huge words, such as ‘supercalifragilisticexpialidocious,’ can consist of 6-10 tokens, depending on the tokenizer.

Smaller tokens allow an LLM to handle a variety of inputs, like unknown words, complex syntax, and typos. They can also reduce the vocabulary size, so the model needs less memory.

Larger tokens, on the other hand, divide a text into fewer pieces, which requires fewer computational resources during processing.

This splitting allows LLMs to effectively handle a huge vocabulary. Text is not the only training basis for AI, either: other forms of data, like images, videos, code, and audio, are used as well.
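
The splitting of words into sub-parts can be sketched with a few lines of Python. This is a simplified illustration, not how any particular LLM does it: production tokenizers typically learn their pieces from data (for example with byte-pair encoding), while this sketch uses a tiny hand-written vocabulary and a greedy longest-match rule. The names `VOCAB` and `tokenize` are hypothetical.

```python
# Hypothetical mini-vocabulary; real tokenizers learn tens of thousands of pieces.
VOCAB = {"the", "is", "we", "un", "common", "sense", "token", "s", "ize", "d"}


def tokenize(word, vocab=VOCAB):
    """Split `word` into the longest known pieces, left to right.

    Characters that match no vocabulary piece become single-character tokens.
    """
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab:
                tokens.append(piece)
                start = end
                break
        else:
            # No piece matched: fall back to a single character.
            tokens.append(word[start])
            start += 1
    return tokens


print(tokenize("the"))        # ['the']
print(tokenize("uncommon"))   # ['un', 'common']
print(tokenize("tokenized"))  # ['token', 'ize', 'd']
print(tokenize("teh"))        # ['t', 'e', 'h']
```

Short, common words stay as one token, longer words break into several known pieces, and a typo like "teh" still gets tokenized by falling back to single characters.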

Now, imagine these small tokens in the trillions! Together, these trillions of tokens and other data points add up to petabytes of data.

For your reference, 1 Petabyte = 1,000,000 GB

Hopefully, now you can understand the volume of data that is used to train LLMs.
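
To put the petabyte figure in everyday terms, here is a quick back-of-the-envelope calculation in Python. The 512 GB laptop is a hypothetical comparison chosen for illustration, and the conversion uses decimal units (1 PB = 1,000,000 GB), as above.

```python
GB_PER_PB = 1_000_000  # decimal (SI) units: 1 petabyte = 1,000,000 gigabytes


def petabytes_to_gigabytes(petabytes):
    """Convert petabytes to gigabytes using decimal units."""
    return petabytes * GB_PER_PB


# Hypothetical comparison: how many 512 GB laptops would it take to hold 1 PB?
LAPTOP_GB = 512
laptops_needed = petabytes_to_gigabytes(1) / LAPTOP_GB
print(laptops_needed)  # 1953.125
```

In other words, a single petabyte would fill nearly two thousand such laptops.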

Can AI Training Be Defective?

AI does not have real-world experiences like we do, such as spilling coffee on ourselves or waiting for someone. This is one of the many limitations of artificial intelligence. All its knowledge comes from extensive recorded human knowledge, with some added refinement. As a result, it might pick up our lifestyle requirements rapidly and accurately, but it can never learn the associated experiences.

For some, it’s quantity over quality.

Some AI firms are trying to close this knowledge gap by feeding more and more data to their LLMs. The more the merrier, I guess! The hope is that data in monumental proportions will teach LLMs more about the ‘human ways’ until they finally possess ‘common sense.’

Though it might seem achievable, scaling at such high levels can have its own problems.

LLMs are trained using thousands of GPUs (Graphics Processing Units), and these need huge data centers. Where do we find the space to construct these giant data centers? And how many more can we fit on our land? This just highlights the physical problems of digital expansion.

Power Concentration

AI’s applications are driven by how LLMs are trained and on how much data. The scale and complexity of LLM training mean that only a few major organizations can afford to build their own LLMs, each with its own biases.

In his research paper, “Common Sense Is All You Need,” Hugo Latapie suggests “a shift in the order of knowledge acquisition,” to develop AI models that “are capable of contextual learning, adaptive reasoning, and embodiment.”

As of yet, we haven’t come up with any definitive solution to this. But many experts agree on two vital requirements of the AI era: strict guidelines on developing AI, and a transparent training process with publicly available data points.

Does Artificial Intelligence Know Common Sense?

When I typed in the exact question on Perplexity, the answer was, “AI does need common sense to reach true autonomy and match the flexibility, adaptability, and reliability of human intelligence, especially for real-world decision making and reasoning tasks.”

This confirms our discussion so far: as of now, AI does not possess human-level common sense. Though that might sound comforting, it might actually be good for AI to have some sort of common sense. Let’s clarify this thought with an example.

Have you ever heard about Nick Bostrom’s “paperclip maximizer”?

This first appeared in Bostrom’s 2003 paper, “Ethical Issues in Advanced Artificial Intelligence.” In his thought experiment, Bostrom argues that an AI asked to pursue even the most insignificant goal, like “maximising the production of paperclips in the world,” could end up eradicating the human race for efficiency’s sake.

In this case, as far as the AI is concerned, all the energy of the world should be focused on the paperclips and not the humans.

It’s definitely an ethical problem, but from a single perspective. For people with common sense, the first question will be, “Who will even use those paperclips in the absence of humans?”

This is common sense. Well, not so evident and not so common for AI.

So yes, AI does need some common sense, especially in the larger context of things.

Introduction to Artificial General Intelligence (AGI)

AGI is still a theoretical concept that describes a state where machines have human-like intelligence. Just like the human brain, such an AI would be able to understand, learn, and apply knowledge across a variety of tasks.

For big organizations that are working on AI advancements, the aim is AGI. The promised benefits for humanity are huge: once AI starts thinking and applying thought to work, humans would have negligible work left, or only the job of managing the machines.

So far, common sense has been one of the biggest hurdles in this path of AI development.

Concept of the Ever-Evolving Common Sense

Newer technologies like AI and machine learning are expected to have a significant impact on what we mean by ‘common sense.’ Its meaning will be shaped by our ever-evolving surroundings, including technology, global dynamics, and society.

AI and ML are already transforming various sectors, like healthcare, finance, transportation, and education, and are driving the rise of autonomous devices. This will also require a re-examination of the ‘common sense’ approach in these areas, as new technologies will introduce fresh solutions and some unexpected challenges.

As humans, we can’t let go of our common sense. We are all using AI in some manner or other to generate reports, provide insights, etc. And that’s fine until AI forgets how the real world works.

When humans stop applying common sense and rely on solutions from an AI that lacks it, the outcome can be disastrous, ranging from wasted time to unethical results. The more data in question, the harder it will be to pinpoint minor errors in the AI system. As of yet, we cannot completely trust AI and must be careful when using it for major tasks. The best way to describe it: when you use AI today, you are not asking for common-sense solutions; you are just outsourcing thoughts. So take only what’s needed, and do not expect anything more in return.
